So, Jonah is moving and he asked me to write a guest post. Jonah’s recent articles about computing prompted me to write about distributed computing. The question I will answer is: how do you go from computing with a sequential program to computing on many-core machines (aka parallel computation)?

Parallel Computation

First of all, what is parallel computation? In a nutshell, parallel computation is the science of using many processors to compute faster. You would certainly want to do this if you worked on the stock market, where the faster you calculate, the faster you can buy and sell and make money. If you are trying to render a TV show, you’ll want to finish it before it is supposed to air. Many newer computers contain several processors, which lets them take advantage of this speedup.

I now want to discuss what it means to have a sequential program, which is the case when only one processor is used. To solve most problems you have a sequence of steps you must complete to get an answer. For example, suppose you want to find the sum of the numbers:

1 2 3 4 5 6 7 8

Computers can only do binary addition, that is, they add two numbers at a time. Because of this, we must break the sum into steps that each add only two numbers. Two possible ways are outlined below:

(((((((1 + 2) + 3) + 4) + 5) + 6) + 7) + 8)

or like this

((1 + 2) + (3 + 4)) + ((5 + 6) + (7 + 8))

The second way might not seem obvious, but it lends itself to parallelization, so we’ll use it as our primary example. Since we are only adding pairs of numbers, we can give each pair a letter to represent it. So, in this case A represents the action of adding 1 and 2, B represents adding 3 and 4, C represents adding 5 and 6, and D represents adding 7 and 8. It’s a bit more abstract, but E is the sum of the results from A and B, F is the sum of the results from C and D, and G is the sum of the results from E and F.

((1 + 2) + (3 + 4)) + ((5 + 6) + (7 + 8)) = ((A + B) + (C + D)) = (E + F) = (G)
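As a minimal sketch of the two groupings above, the Python below adds the same eight numbers left to right and then in pairwise rounds. The function names `sequential_sum` and `pairwise_sum` are made up for this illustration, and both assume a non-empty list.

```python
from functools import reduce
from operator import add

numbers = [1, 2, 3, 4, 5, 6, 7, 8]


def sequential_sum(values):
    """Left-to-right grouping: (((1 + 2) + 3) + 4) and so on."""
    return reduce(add, values)


def pairwise_sum(values):
    """Pairwise grouping: add neighbours in rounds until one value is left."""
    values = list(values)
    while len(values) > 1:
        # One round adds each adjacent pair (A, B, C, D in the first round).
        paired = [values[i] + values[i + 1] for i in range(0, len(values) - 1, 2)]
        # With an odd number of values, the last one is carried to the next round.
        if len(values) % 2:
            paired.append(values[-1])
        values = paired
    return values[0]


print(sequential_sum(numbers), pairwise_sum(numbers))  # prints "36 36"
```

Both groupings use seven additions. The difference is that the additions inside one pairwise round do not depend on each other.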

To illustrate the sequential case, suppose you have a single processor which can only do one operation at a time. It would look like a single channel: you send it commands and it executes them one after another.

====================================

CPU 0 | A B C D E F G

====================================

This is called the sequential method where only one processor is utilized. If each step took 1 second to complete, this code would take 7 seconds. The steps could be performed in other orders as long as A and B come before E, C and D before F, and E and F before G. If this wasn’t the case, you wouldn’t have the output from the steps before and the code wouldn’t work.

Now, the trouble occurs when you have two processors. How do you decide how to run the commands in parallel? This example is pretty straightforward: you can only run a command once both its left and right inputs are available. Using the example above, an efficient order could be

=====================================

CPU0 | A | B | E | G

=====================================

CPU1 | C | D | F |

=====================================

Here A and C, B and D, and E and F are all performed in parallel. We can’t parallelize G, as it just adds the last two numbers together, and that can only be run on one processor. Now, again, if each operation takes one second then this would take 4 seconds. We seem to have achieved a 3-second speedup in our code.
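Here is a hedged sketch of the two-processor idea using Python’s standard `concurrent.futures` module. The helper names `add_pair` and `parallel_pairwise_sum` are invented for this example. Note the assumption: on a standard CPython build, threads do not speed up pure-Python arithmetic, so this only illustrates which additions are allowed to overlap, not a real timing gain.

```python
from concurrent.futures import ThreadPoolExecutor


def add_pair(pair):
    """One step such as A, B, ... G: add a left and a right value."""
    left, right = pair
    return left + right


def parallel_pairwise_sum(values, workers=2):
    """Sum a non-empty list in rounds, sharing each round among the workers."""
    values = list(values)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        while len(values) > 1:
            pairs = [(values[i], values[i + 1]) for i in range(0, len(values) - 1, 2)]
            leftover = [values[-1]] if len(values) % 2 else []
            # Additions within a round are independent, so the pool may overlap them.
            # The next round starts only after this round's results are collected.
            values = list(pool.map(add_pair, pairs)) + leftover
    return values[0]


print(parallel_pairwise_sum([1, 2, 3, 4, 5, 6, 7, 8]))  # prints 36
```

The last round always holds a single pair, which matches the observation that G can only run on one processor.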

Flynn’s Taxonomy

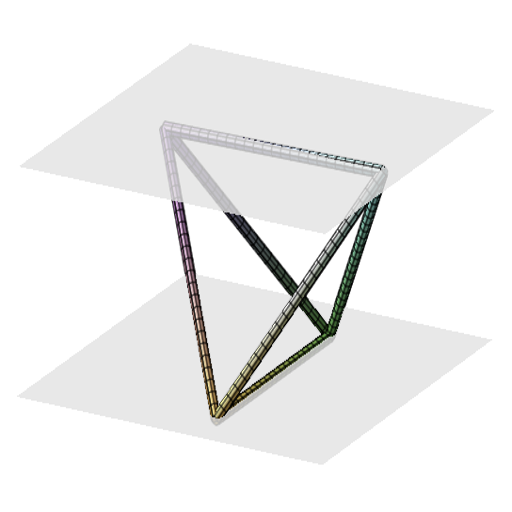

Our example before this outlines one of the most common ways to run a program in parallel. However, there are many way to do multi-processing. To help identify the different types Michael J. Flynn created a taxonomy. His taxonomy, simply called Flynn’s Taxonomy, splits all programs into four groups denoted by SISD, MISD, SIMD, MIMD. You can visualize the taxonomy in the following graphic:

The four types are separated into if they have one or more of each an instruction set and data set. The four combinations yield the four types in Flynn’s Taxonomy.

The four types with their names expanded are as follows:

- SISD – Single Instruction Single Data

- MISD – Multiple Instruction Single Data

- SIMD – Single Instruction Multiple Data

- MIMD – Multiple Instruction Multiple Data

The sequential addition code is an example of the SISD type, and our parallel addition example is illustrative of the SIMD (Single Instruction Multiple Data) type. This is because each processor does the same thing: it takes four numbers and adds them pairwise. However, CPU0 gets the numbers 1 2 3 4 and CPU1 gets 5 6 7 8. This means each copy of the instructions gets a unique set of inputs.

So, you might ask, how do the other two types come into play? The MISD case can arise when, say, you have the medical records of many patients and you want to know if your model (A) predicts cancer better than another model (B). You would want to check both on the same data, but you want to run two different sets of instructions, or models; in this case A and B.

As for MIMD, this usually comes about when processing large amounts of different data. For example, suppose you are Google and you have billions of photographs. If you want to store them in the most compact form possible, you’ll probably want to compress them. Since different photos lend themselves to different compression algorithms, you might want to compress each photo with two different algorithms (the instructions) and keep the best result. I’m not saying this is how they do it, but it does illustrate an instance where you might use a MIMD type program.
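To make the four combinations concrete, here is a toy sketch that pairs instructions with data in each of the four ways. The functions `total` and `largest` and the two data chunks are stand-ins chosen for this illustration, and everything runs on one processor, so it only shows how instructions and data are matched up, not real parallel hardware.

```python
def total(chunk):
    """One possible instruction set: add everything up."""
    return sum(chunk)


def largest(chunk):
    """A different instruction set: pick the biggest value."""
    return max(chunk)


chunk_a = [1, 2, 3, 4]
chunk_b = [5, 6, 7, 8]

# SISD-style: one set of instructions, one set of data.
sisd = total(chunk_a)

# SIMD-style: the same instructions applied to several sets of data.
simd = [total(chunk) for chunk in (chunk_a, chunk_b)]

# MISD-style: different instructions applied to the same data.
misd = [instruction(chunk_a) for instruction in (total, largest)]

# MIMD-style: different instructions, each with its own data.
mimd = [instruction(chunk) for instruction, chunk in ((total, chunk_a), (largest, chunk_b))]

print(sisd, simd, misd, mimd)  # prints "10 [10, 26] [10, 4] [10, 8]"
```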

An Example of Parallel Code

Weather simulations need to be fast and accurate. To achieve the accuracy, software divides the surface of the earth up into little squares. Each square represents one region and contains the data for its temperature, humidity, wind, etc. To make the simulation doable, the whole region is represented as a single point, usually located in the center of the region.

To simulate the weather, the program will move air from one such point to another to represent wind. The wind carries water and clouds, which change the temperature and, not to make it sound too simple, change the weather. By simulating this we can predict to a reasonable extent how the weather will unfold tomorrow.

Of course, if the region is too large it will not reflect the weather accurately. For instance, if the region was chosen to be the size of the US, it would represent all the weather in the US as the same, which certainly isn’t true. The size of a region is usually about the size of a large neighborhood as seen below:

There is a cost to choosing a small area: you need more memory to store all the information for all of the regions. Additionally, each time you add more regions you must do computations on those new regions, which increases the computing time.

To overcome this, the National Weather Service (NWS) predicts weather on large distributed computer systems, putting a few areas onto each computer. Then each computer applies the same instructions to its set of areas, progressing the simulation one small amount of time into the future. This is then done many times until the future time is reached.

This way they can have predictions about tomorrow’s weather before tomorrow gets here.
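The idea of giving each computer a few areas and stepping them all forward can be sketched in a few lines. This is a toy under loud assumptions: the regions sit in a single row, the only quantity is temperature, and the update rule (average each region with its neighbours) is invented for illustration and is not how real forecasting models work. The names `step_chunk` and `simulate` are hypothetical, and the chunks are processed one after another here rather than on separate machines.

```python
def step_chunk(cells, start, stop):
    """Advance regions start..stop-1 by one time step using the old state."""
    updated = []
    for i in range(start, stop):
        # Regions at the edge of the row reuse their own value as the missing neighbour.
        left = cells[max(i - 1, 0)]
        right = cells[min(i + 1, len(cells) - 1)]
        updated.append((left + cells[i] + right) / 3)
    return updated


def simulate(cells, machines, steps):
    """Split a non-empty row of regions into chunks and step them all forward."""
    size = -(-len(cells) // machines)  # ceiling division: regions per machine
    bounds = [(s, min(s + size, len(cells))) for s in range(0, len(cells), size)]
    for _ in range(steps):
        # Each chunk only reads the previous state, so every chunk could be
        # handed to a different computer and computed at the same time.
        chunks = [step_chunk(cells, start, stop) for start, stop in bounds]
        cells = [value for chunk in chunks for value in chunk]
    return cells


temperatures = [10.0, 10.0, 40.0, 10.0, 10.0, 10.0]
print(simulate(temperatures, machines=3, steps=2))
```

Notice that a chunk needs the values of the regions just outside its own borders. On a real distributed system those border values have to be sent between computers at every step, which is part of the cost of splitting the work.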

The Trouble With Parallelized Programs

At this point it might seem as if we could continue to use more computers and get better and better performance. This would be nice, but we run into the issue that not all code is parallelizable. Remember the sum of numbers problem: the last step required us to use only one processor to add the two final numbers together. In fact, if we add more processors to that example, we see more steps that can only be run sequentially.

=====================================

CPU0 | A | E | G

=====================================

CPU1 | B | F |

=====================================

CPU2 | C |

=====================================

CPU3 | D |

=====================================

Here, if we assume each step takes a second, we use 3 seconds. This means when we have 1 processor it took 7 seconds, with 2 it took 4 seconds, and with 4 it took 3 seconds. It seems that as we add more processors we get a diminishing return in speedup. In fact, if we add more than four there will be no additional speedup. This fact is called Amdahl’s Law.
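The timings above can be reproduced with a small simulation. The sketch below assumes every step takes one second and uses a simple greedy rule (each second, run as many ready steps as there are processors). That rule is an assumption that happens to work for this example and is not the best strategy for every problem. The name `seconds_needed` is made up for this illustration.

```python
# Which earlier results each step needs before it can run.
dependencies = {
    "A": [], "B": [], "C": [], "D": [],
    "E": ["A", "B"],
    "F": ["C", "D"],
    "G": ["E", "F"],
}


def seconds_needed(deps, processors):
    """Count one-second rounds needed to finish every step (deps must have no cycles)."""
    done = set()
    seconds = 0
    while len(done) < len(deps):
        # A step is ready once all the results it depends on are available.
        ready = [step for step in deps
                 if step not in done and all(d in done for d in deps[step])]
        # Each processor takes at most one ready step per second.
        done.update(ready[:processors])
        seconds += 1
    return seconds


for processors in (1, 2, 4, 8):
    print(processors, seconds_needed(dependencies, processors))
# prints:
# 1 7
# 2 4
# 4 3
# 8 3
```

Going from four to eight processors changes nothing, because at most four steps are ever ready at the same time.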

Amdahl’s Law gives us a set of equations which depend on the portion of the code that is parallelizable and the number of processors used. The graphic above shows us that for any proportion of parallelizable code, there is an upper limit to the speedup. Past a certain point, it is not possible to get more speed by adding more processors.

This fact has forced people to look for alternative methods to speed up their code. Most of the time they find better algorithms and mathematics which simplify what they must do to solve a problem, and this makes it run faster.
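In its usual form, Amdahl’s Law says the speedup with N processors is 1 / ((1 - p) + p / N), where p is the fraction of the run time that can be parallelized. Here is a small sketch of that formula; the function name `amdahl_speedup` and the 90% figure are picked just for this example.

```python
def amdahl_speedup(parallel_fraction, processors):
    """Speedup predicted by Amdahl's Law for a given parallel fraction."""
    serial_fraction = 1.0 - parallel_fraction
    # The serial part always takes the same time; only the parallel part shrinks.
    return 1.0 / (serial_fraction + parallel_fraction / processors)


# Assume 90% of the program can run in parallel.
for processors in (1, 2, 4, 16, 1024):
    print(processors, round(amdahl_speedup(0.9, processors), 2))
# prints:
# 1 1.0
# 2 1.82
# 4 3.08
# 16 6.4
# 1024 9.91
```

No matter how many processors are added, the speedup in this example stays below 1 / (1 - 0.9) = 10, because the remaining 10% of the work still runs on a single processor.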

Conclusion

Parallel computing makes it possible to do large calculations in a timely manner but it is not a simple situation and there are certainly caveats to be aware of. But as computers become more parallel (which is a discussion for another day), parallel computing becomes a bigger and bigger deal. For example, Google recently created a system for processing big data in an efficient way called MapReduce.

Jonah uses very parallel computers to study gravitational physics and he’ll discuss the intricacies of scientific computing in this environment sometime soon.

Hope this inspired some of you to investigate further. Feel free to ask any questions you might have.
