Editor's Note: This week, I’m busy with final exams here in Guelph, so my good friend Michael Schmidt has graciously agreed to do a guest post. Thanks, Mike!

Hi everyone! Since last time I decided to talk about the basics of probability, I thought this time I would expand on that subject. In Part 1, I discussed how to count different possible outcomes of random events and determine the likelihood of particular events. If you have not read that, or it’s been a while, you should read over Part 1. This method is great when there are relatively few possible states but becomes burdensome when you introduce more complicated setups. As usual, when you try to model things like people and their actions, there are many random factors which are not always easy to predict. Enter distributions, a wonderful tool for troublesome situations!

The Problem

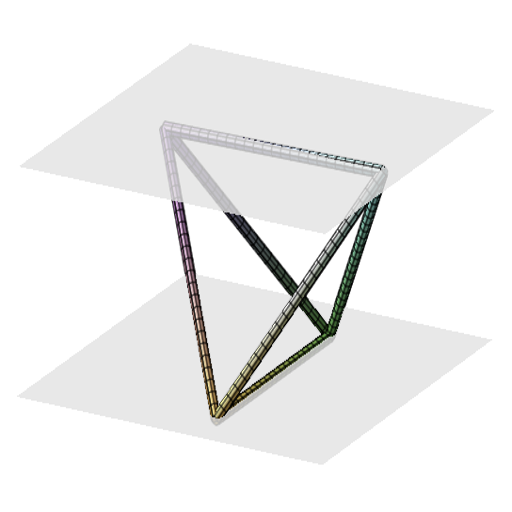

One of the most basic problems where the total number of states becomes troublesome is Galton’s Board. Galton’s Board (or the bean machine) is a panel with pegs arranged in rows, a channel where balls or beans flow onto the topmost row of pegs, and collection channels where the balls exit the rows of pegs and are stacked for counting.

The reason this is of interest is whenever a ball hits a peg it can go left or right. If the machine is built with enough care, the ball will have a 50% probability of going left and 50% probability of going to the right. As you can see in the following image, the ball will encounter a peg many times on the way down. At each peg the question of direction will be revisited at which time the ball may change directions.

Interestingly, there are many paths that lead to the same bin. This means we have to count up all the possible paths to figure out what the probability of finding the ball in a particular bin might be.

Let us look at the leftmost bin and see what paths end up there. It seems there is only one path, that is, at each peg the ball goes left. If at any time it goes right, it will be unable to get back. Since there is only one path we multiply the probabilities of going left by each other and multiply it by one, the total number of paths. This gives us a probability of $\left(\frac{1}{2}\right)^n$, where $n$ is the number of pegs the ball meets on the way down, which turns out to be $\left(\frac{1}{2}\right)^6 \approx 1.6\%$ in our example. The second bin on the left, however, has multiple paths. It requires the ball to go right only once at any point. Since there are $n$ pegs, the ball may go right once at any of those points; this gives us $n$ different paths. This means the total probability is $n\left(\frac{1}{2}\right)^n$ which in our example is $6\left(\frac{1}{2}\right)^6 \approx 9.4\%$. The third bin from the left is a little more tricky. It turns out it requires two right bounces. This means there are $\binom{n}{2}$ different paths. Here, $\binom{n}{k}$ is the binomial coefficient or choose function. It gives the total number of ways you can choose $k$ objects from a total of $n$. The algebraic form of the choose function is

$$\binom{n}{k} = \frac{n!}{k!\,(n-k)!}$$

This allows us to know how many total paths there could be. In the case above, $n = 6$ and $k = 2$ so the total number of paths is $\binom{6}{2} = 15$. The probability is therefore $\binom{n}{2}\left(\frac{1}{2}\right)^n$ which is $15\left(\frac{1}{2}\right)^6 \approx 23.4\%$ in our example. This trend continues in the same fashion which gives us a general form for bin $k$ (counting from $k = 0$ at the leftmost bin, so that $k$ is the number of right bounces) to be

$$P(k) = \binom{n}{k}\left(\frac{1}{2}\right)^n$$

If the machine were not built well there could be a bias to one side or another. To model this we can prescribe different probabilities to left or right action. In that case we get the following probability per bin:

$$P(k) = \binom{n}{k}\, p^{\,n-k}\, q^{\,k}$$

where $p$ is the probability of the ball falling to the left and $q$ is the probability of the ball falling to the right, with $p + q = 1$. This is known as the binomial distribution.
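For readers who like to check this sort of thing by computer, here is a minimal Python sketch of the counting argument above. It assumes a board with 6 rows of pegs (the figure a reader quotes in the comments), and the function names `bin_probabilities` and `count_paths` are made up for illustration. It evaluates the binomial formula for each bin and, as a sanity check, also counts the paths by brute force.

```python
from itertools import product
from math import comb


def bin_probabilities(n_rows, p_right=0.5):
    """Binomial probability of each bin, indexed by the number of right bounces."""
    p_left = 1.0 - p_right
    return [
        comb(n_rows, k) * p_left ** (n_rows - k) * p_right ** k
        for k in range(n_rows + 1)
    ]


def count_paths(n_rows):
    """Brute-force check: enumerate every left/right sequence and tally by bin."""
    counts = [0] * (n_rows + 1)
    for path in product((0, 1), repeat=n_rows):  # 0 = left, 1 = right
        counts[sum(path)] += 1  # the bin index is the number of right bounces
    return counts


if __name__ == "__main__":
    n = 6  # assumed number of rows of pegs
    paths = count_paths(n)
    probs = bin_probabilities(n)
    for k in range(n + 1):
        print(f"bin {k}: {paths[k]:2d} paths, probability {probs[k]:.1%}")
```

Passing a value other than 0.5 for `p_right` gives the biased-board version of the formula.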

Probability Distributions

What is a distribution? In short, it is a way of laying out different bins or groups and prescribing probabilities to each of them. Almost everyone is familiar with the bell curve; the bell curve is, as it turns out, a distribution. In math circles, the bell curve is usually referred to as the Normal Distribution. The normal distribution lets us model the results of many random trials which can interact with each other. This is usually the case for exam grades and the like. Each person taking the test has had a large number of different experiences which have prepared them for the exam questions. Since this is the case you would expect the grades to fall along a distribution like that below:

You may now ask how this connects with the previous distribution. The answer is if we have a large number of rows of pegs then we will start to get curves that look more and more like the normal distribution. Below, I’ve included an animation of a binomial distribution when the number of pegs is increased.

In fact, this tendency to look more and more like the normal distribution isn’t a coincidence; it happens whenever a large number of independent random contributions are added together. There are some conditions on that statement but I’ll leave that to those who are curious. This property is called the Central Limit Theorem. This fact means there is a lot we can learn about random events if we study the normal distribution.
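The claim that the binomial distribution approaches the bell curve as rows are added can be checked numerically. The sketch below is only an illustration, not a proof: it compares the binomial probabilities for a fair board with a normal curve of the same mean ($n/2$) and standard deviation ($\sqrt{n}/2$), and reports the largest mismatch relative to the height of the binomial peak. The helper names are hypothetical.

```python
from math import comb, exp, pi, sqrt


def binomial_pmf(n, k, p=0.5):
    """Probability of exactly k right bounces in n rows."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)


def normal_pdf(x, mu, sigma):
    """Height of the normal curve with mean mu and standard deviation sigma at x."""
    return exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * sqrt(2 * pi))


def relative_gap(n, p=0.5):
    """Largest |binomial - normal| over all bins, divided by the binomial peak."""
    mu = n * p
    sigma = sqrt(n * p * (1 - p))
    pmf = [binomial_pmf(n, k, p) for k in range(n + 1)]
    gap = max(abs(pmf[k] - normal_pdf(k, mu, sigma)) for k in range(n + 1))
    return gap / max(pmf)


if __name__ == "__main__":
    for n in (6, 20, 100, 500):
        print(f"{n:4d} rows: relative gap {relative_gap(n):.4f}")
```

The gap shrinks as the number of rows grows, which is the behavior described above.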

Some Things About The Normal Distribution

The normal distribution is interesting as its mean and median are the same. That is, the average value is also the value that splits the population into two even groups. This value is represented in the general equation below as $\mu$. In addition, the width of the normal distribution is also characterized. This term is called the variance, $\sigma^2$, or the standard deviation, $\sigma$. While these two have different strict mathematical definitions (the variance is the square of the standard deviation), you can think of this term as dialing in the width. Pictorially, this is represented by the following diagram:

and we can express the functional form as

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}$$

The power of this distributional representation of possible outcomes is that you can add up the little areas under the curve between two points and get an approximation for the percentage of events within that range. (For experts, this is just the integral of the distribution between the two points.) For example, suppose you had test scores that fell along a normal distribution with a mean of 60% and a standard deviation of 10%. This would result in a curve like that below.

In this figure I’ve highlighted the range from 70% to 100%. This area represents 15.9% of the test-takers meaning we expect 15.9% of the total population to have scored above 70%.
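The 15.9% figure can be reproduced with a few lines of Python. This is a small sketch using the error function from the standard library to integrate the normal curve; the function names are my own, and the mean of 60 and standard deviation of 10 come from the example above.

```python
from math import erf, sqrt


def normal_cdf(x, mu, sigma):
    """Fraction of a normal population with mean mu and std sigma lying below x."""
    return 0.5 * (1.0 + erf((x - mu) / (sigma * sqrt(2.0))))


def fraction_between(lo, hi, mu, sigma):
    """Area under the normal curve between lo and hi."""
    return normal_cdf(hi, mu, sigma) - normal_cdf(lo, mu, sigma)


if __name__ == "__main__":
    share = fraction_between(70, 100, mu=60, sigma=10)
    print(f"Scored between 70% and 100%: {share:.1%}")  # prints 15.9%
```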

How Distributions Apply To Physics

Distributions are particularly helpful to quantum physics as they can be used to describe where a particle might be found. Suppose a particle is trapped in a box. The particle’s position will be probabilistic, meaning it is not localized in any particular part of the box; rather, there are places where it is more likely to be found. I won’t go into the details now, but it can be shown that a particle in its lowest energy state is distributed like so between two impassable barriers:

As you can see, the most likely place for the particle to be found is in the middle of the box. In fact, 50% of the time it will be found within the following highlighted area:

The second energy level has a more curious distribution. It looks like this:

The distributions in quantum mechanics will continue to behave even more interestingly as the setups get more complicated. However complicated they become, the methodology outlined here is the same. Probability underpins all of quantum mechanics and, hopefully, I’ve equipped you with a little more understanding.
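To make the particle-in-a-box picture a little more concrete, here is a rough Python sketch. It rests on assumptions not spelled out in the post: the textbook result that level $n$ of a box of width $L$ has probability density $\frac{2}{L}\sin^2\!\left(\frac{n\pi x}{L}\right)$, and that the 50% region is centered on the middle of the box. The function names are hypothetical. It finds how wide that central region has to be for the lowest level.

```python
from math import pi, sin


def central_probability(half_width, n=1, length=1.0):
    """Chance of finding the particle within half_width of the box centre (level n)."""

    def cumulative(x):
        # Integral of (2/L) * sin^2(n*pi*x/L) from 0 to x (assumed textbook density).
        return x / length - sin(2 * n * pi * x / length) / (2 * n * pi)

    return cumulative(length / 2 + half_width) - cumulative(length / 2 - half_width)


def half_width_for(target, length=1.0, tol=1e-12):
    """Bisection: the central probability for the lowest level grows with half_width."""
    lo, hi = 0.0, length / 2
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if central_probability(mid, 1, length) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


if __name__ == "__main__":
    w = half_width_for(0.5)
    print(f"Lowest level: 50% of the time within +/- {w:.3f} L of the centre")
    print(f"Second level, same region: {central_probability(w, n=2):.1%}")
```

Under these assumptions the central 50% region spans only about a quarter of the box, while the second level puts far less probability in that same region because its distribution vanishes at the center.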

It does seem strange that the quantum world acts with such indeterminacy. This notion is certainly distressing, as our macro experiences of the physical world are so predictable; however, it seems to stand the tests of science. Einstein famously disagreed with the idea that nature was intrinsically random by saying: “God does not play dice”. While we are not certain nature is random, our experiences lend credence to that effect. Since quantum mechanics has existed, its theories have been instrumental in our understanding of nature and it has led to the creation of lasers, microscopes, computer hardware, and countless other technologies.

Further Reading

Jonah says: If you liked Mike’s post, you might also enjoy the articles I wrote about quantum mechanics.

- To get you started, I wrote about particle wave duality. This is part one of a three part series.

- Part two was an article on the Bohr model of the atom.

- And part three was an article on matter waves.

- I explain the Heisenberg uncertainty principle in this article.

- I explain the Pauli exclusion principle in this article.

- I explain quantum tunneling in this article.

- I explain the Feynman path integral here. You may need to know about imaginary numbers for this one. Fortunately I’ve written about those.

You lost me at the binomial coefficient. How does 0.5^6 equal a probability of 23.4%?

OK, I get it it’s 15*(0.5^6) – my bad.

Very very very interesting. The binomial distribution graph really gets the brain going. Also, kind of funny: Michio Kaku’s response to Einstein’s famous no-dice statement, “Well... Hey, get used to it. Einstein was wrong. God does play dice. Every time we look at an electron it moves. There’s uncertainty with regards to the position of the electron.” Anyways, good luck with all finals.