As the COVID-19 situation has unfolded, one thing that has helped me process what’s going on is looking at the basics of how experts predict the severity of the epidemic. I wrote up a little of what I found, and maybe this writeup will help you process too. You can find the writeup, which is a mix of math and code, on GitHub (https://github.com/Yurlungur/mathematics-of-epidemics). Stay safe out there, everyone. We’re all in this together.

Astrophysics / cosmology / Physics / etc.

Dark Energy, Numerical Relativity, and Astrophysics

So I did an interview for the “Tilting at the Universe” podcast. In it, I describe the history of dark energy and the expanding universe, how the mystery of dark energy may be solved once we reconcile quantum mechanics and general relativity, how the astrophysics of black holes and neutron stars may help us understand quantum gravity, and how my field of numerical relativity fits into all of this. I think I did a pretty good job of explaining what excites me about the field, so check it out. The interview is here. In the interview, I mention

Physics / Relativity / Science And Math

The Direction of LIGO’s Gravitational Waves

On September 14th, 2015, the LIGO gravitational wave observatory network detected the gravitational waves from the merger of two black holes. Within moments, the LIGO team estimated (very broadly) where in the sky the black holes were located; these regions are highlighted in figure 1. Today I tell you how they figured this out, and why it’s important.

Electromagnetic Counterparts

First, let’s talk about why the direction of the waves is important. When LIGO detects gravitational waves, those waves can tell us an awful lot about their source. Just from the waveform, LIGO learned that the waves from December
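The geometric core of a two-detector direction estimate is timing. If a plane wave reaches two detectors separated by a baseline of length d with a delay Δt, the source lies on a ring on the sky at angle θ from the baseline, where cos θ = cΔt/d. Below is a minimal sketch of that idea under stated assumptions: the roughly 3,000 km Hanford to Livingston separation (about 10 ms of light travel time) and the roughly 7 ms delay reported for the September 2015 event are our inputs, not numbers from this post, and the function name is illustrative. A real localization also uses the signal amplitudes and phases at each detector, so this is only the timing piece.

```python
import math

# Assumed values for illustration (not taken from the post):
C = 299_792_458.0   # speed of light, m/s
BASELINE_M = 3.0e6  # approximate Hanford-Livingston separation, m
DELAY_S = 6.9e-3    # approximate arrival-time difference for the Sept. 2015 event, s


def ring_opening_angle_deg(delay_s: float, baseline_m: float) -> float:
    """Angle between the detector baseline and the source direction, in degrees.

    A plane wave arriving at angle theta to the baseline travels an extra
    path c * delay between detectors, so cos(theta) = c * delay / d.
    Every direction with that angle lies on a ring on the sky.
    """
    cos_theta = C * delay_s / baseline_m
    if abs(cos_theta) > 1.0:
        raise ValueError("delay exceeds the light travel time between detectors")
    return math.degrees(math.acos(cos_theta))


if __name__ == "__main__":
    print(f"max possible delay: {BASELINE_M / C * 1e3:.1f} ms")
    print(f"ring opening angle: {ring_opening_angle_deg(DELAY_S, BASELINE_M):.1f} deg")
```

With only two detectors, timing alone gives a ring rather than a point, which is why the sky regions from a two-detector event are long, thin arcs.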

Astrophysics / Physics / Relativity / etc.

The Black Holes that Created LIGO’s Gravitational Waves

A little over a week ago, the LIGO collaboration announced that they had detected gravitational waves emitted during the in-spiral and merger of two black holes. And the world’s scientists, myself included, collectively went bananas. Last week, I attempted to summarize the event and capture some of the science, and poetry, that has us so excited. In short, gravitational waves provide us a totally new way to look at the universe. LIGO’s one detection has already provided us with a wealth of information about gravity and astrophysics. Today, I summarize some of what we’ve learned.

Black Holes As We Knew Them

In the

Astrophysics / Physics / Relativity / etc.

The Poetry of LIGO’s Gravitational Waves

Yesterday the LIGO Scientific Collaboration announced that they had detected the gravitational waves from the in-spiral and merger of two black holes, shown in figure 1. It would not be an overstatement to say that this result has changed science forever. As a gravitational physicist, I find it hard to put into words how scientifically important and emotionally powerful this moment is for me and for everyone in my field. But I’m going to try. This is my attempt to capture some of the science, and the poetry, of LIGO’s gravitational wave announcement.

The Source

About 1.3 billion years ago

Astrophysics / Physics / Relativity / etc.

The Geodetic Effect: Measuring the Curvature of Spacetime

A couple of weeks ago, I described the so-called “classical tests of general relativity,” which were tests of early predictions of the theory. This week, I want to tell you about a much more modern, difficult, and convincing test: a direct measurement of the curvature of spacetime. It’s called the geodetic effect. This is the eighth post in my howgrworks series. Let’s get to it. We know from general relativity that gravity is a distortion of how we measure distance and duration, and that we can interpret this distortion as the curvature of a unified spacetime. When particles travel
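To get a feel for how small the geodetic effect is, here is a back-of-the-envelope sketch (our addition, not the post’s derivation) using the standard weak-field result for a gyroscope in a circular orbit of radius r around a mass M: its spin axis precesses at Ω = (3/2)(GM)^(3/2) / (c² r^(5/2)). We assume an orbit about 642 km above Earth’s mean surface, roughly the altitude of the Gravity Probe B satellite that measured this effect; the constants and function names are our own choices.

```python
import math

# Physical constants (SI units)
C = 299_792_458.0          # speed of light, m/s
GM_EARTH = 3.986004418e14  # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6.371e6          # Earth's mean radius, m

# Assumed orbit: circular, ~642 km up (roughly Gravity Probe B's altitude)
ALTITUDE = 642e3           # m

SECONDS_PER_YEAR = 365.25 * 86400
ARCSEC_PER_RAD = 180.0 / math.pi * 3600.0


def geodetic_precession_rate(gm: float, r: float) -> float:
    """Geodetic (de Sitter) precession rate in rad/s for a circular orbit.

    Weak-field result: Omega = (3/2) * (GM)^(3/2) / (c^2 * r^(5/2)).
    """
    return 1.5 * gm ** 1.5 / (C ** 2 * r ** 2.5)


def arcsec_per_year(rate_rad_per_s: float) -> float:
    """Convert a rate in rad/s to arcseconds per Julian year."""
    return rate_rad_per_s * SECONDS_PER_YEAR * ARCSEC_PER_RAD


if __name__ == "__main__":
    r = R_EARTH + ALTITUDE
    rate = geodetic_precession_rate(GM_EARTH, r)
    print(f"geodetic precession: {arcsec_per_year(rate):.2f} arcsec/year")
```

This comes out to about 6.6 arcseconds per year, the same size as the geodetic precession Gravity Probe B was designed to detect: a tiny angle, which is why the measurement was so hard.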

Physics / Science And Math

Book Review: Beyond the Galaxy

Earlier this year, I was asked to review Ethan Siegel’s upcoming book Beyond the Galaxy, shown in figure 1. I got an advance copy and dug in, and I really loved what I found. With Ethan’s permission, I’m reposting my review here so you can all read it.

The Review

The history of science is filled with ideas that were once compelling but have since been ruled out by empirical evidence. Ethan Siegel’s Beyond the Galaxy understands this fundamental truth of science. With eloquence and clarity, Siegel tells us the story of the universe, from the (inferred)

Physics / Quantum Mechanics / Relativity / etc.

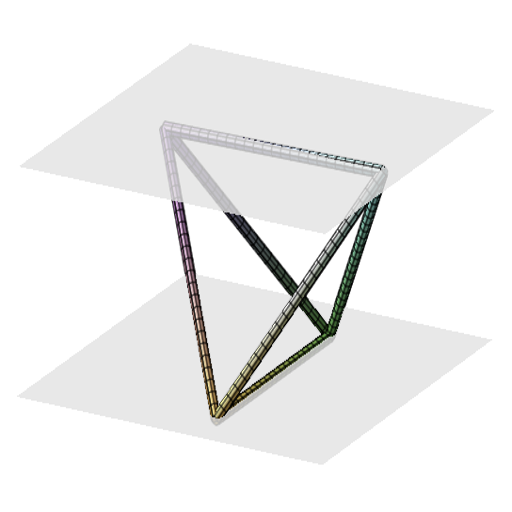

The Holometer

You may have already heard the buzz about the holometer, shown in figure 1. It’s a giant laser interferometer, much like those used to search for gravitational waves, designed to detect quantum fluctuations in the fabric of spacetime. At least, that’s the claim. The holometer team just released a preprint of their first science paper, and, of course, a Fermilab press release appears in Symmetry Magazine. The article is good, and I recommend you read it. And the holometer experiment is good, interesting science. But I have to say, I’m extremely annoyed by how much the holometer team is overselling their

Physics / Relativity / Science And Math

Classical Tests of General Relativity

Last Wednesday, November 25, was the 100-year anniversary of general relativity: on that day in 1915, Einstein presented his field equations, shown in figure 1, to the world. In celebration of this anniversary, today I present to you some of the early triumphs of general relativity: classical predictions of the theory that have been precisely tested and where theory has exquisitely matched experiment. This is the sixth installment of my howgrworks series. Let’s get started.

The Perihelion of Mercury

Before Einstein, we believed that the motion of planets in the solar system was governed by Kepler’s laws
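As a worked example of the first classical test, the standard general-relativistic prediction for the extra perihelion advance per orbit is Δφ = 6πGM / (c² a (1 − e²)), where a is the orbit’s semi-major axis and e its eccentricity. The minimal sketch below assumes textbook orbital elements for Mercury (our values, not the post’s; the function names are illustrative) and converts the per-orbit shift into arcseconds per century.

```python
import math

C = 299_792_458.0          # speed of light, m/s
GM_SUN = 1.32712440018e20  # Sun's gravitational parameter, m^3/s^2

# Assumed orbital elements for Mercury
A_MERCURY = 5.7909e10      # semi-major axis, m
E_MERCURY = 0.2056         # eccentricity
PERIOD_DAYS = 87.969       # orbital period, days

ARCSEC_PER_RAD = 180.0 / math.pi * 3600.0


def perihelion_shift_per_orbit(gm: float, a: float, e: float) -> float:
    """GR perihelion advance per orbit, in radians: 6*pi*GM / (c^2 a (1 - e^2))."""
    return 6.0 * math.pi * gm / (C ** 2 * a * (1.0 - e ** 2))


def arcsec_per_century(shift_per_orbit: float, period_days: float) -> float:
    """Accumulate a per-orbit shift over one Julian century, in arcseconds."""
    orbits_per_century = 100 * 365.25 / period_days
    return shift_per_orbit * orbits_per_century * ARCSEC_PER_RAD


if __name__ == "__main__":
    shift = perihelion_shift_per_orbit(GM_SUN, A_MERCURY, E_MERCURY)
    print(f"{arcsec_per_century(shift, PERIOD_DAYS):.1f} arcsec per century")
```

The result is about 43 arcseconds per century, the famous anomaly that Newtonian gravity plus perturbations from the other planets could not explain.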

Science And Math

Conference Report: SC15

This last week, I had the privilege of attending SC, the biggest annual supercomputing conference in North America. I was one of about ten students studying high performance computing (and related fields) who were funded to go by a travel grant from the HPC topical group of the Association for Computing Machinery. It was a blast, and I learned a ton. I haven’t had much time to write up any science results, so I figured I’d give a few brief highlights of the conference instead.

Vast Scale

SC15 was by far the biggest conference I’ve ever attended.